Protecting our privacy can affect our general health and wellbeing more directly than most of us realize. We are in the midst of ethical and legal discussions that will shape our future attitudes towards data privacy. But most of these arguments leave out an important point: data privacy can be a powerful social determinant of health, with potentially the greatest impact on the most vulnerable members of society.

Privacy advocates emphasize individuals' basic desire to be left alone. Others promote the idea that, as owners of their data, individuals should benefit financially when their information is shared. A relatively new concern, shared by otherwise opposing groups, focuses on profiling individuals by their political views, which makes them targets for manipulation and ultimately threatens democratic processes worldwide. Counterarguments usually focus on the logistical challenges of giving individuals control over every piece of their data, possible risks to research, technological innovation and business models, and even the pessimistic view that most of our data already exist in the public domain and that it is too late to change anything. Each of these arguments deserves time and attention, but none considers the potential impact on public health and health promotion.

In population-based research we aim to obtain the largest possible sample that best represents the population, while keeping the study feasible with limited resources. A large, representative sample gives our analyses statistical power and makes our results more reliable. Indeed, many research papers conclude that the study should be repeated with a larger data set. With new privacy rules taking effect in Europe and around the world, concerned researchers argue that obtaining consent from participants whenever their data are collected and used would limit future research and ultimately hinder scientific progress and the quality of medical care. Some point to the need to use patient identifiers to cross-link different data sources and remove duplicate entries, or to use health records and cancer registries for new research. These are important concerns, but we also need to remember that, as a result of misguided and at times outright unethical research practices in the past, human subjects research now has strong ethical guidelines and principles in place. Through institutional review boards, funder requirements, and journal policies, public health and clinical researchers have long been aware of the need to obtain consent and protect participant privacy. Ultimately, the consensus is that we can do both: protect participant privacy and support high-quality research.

There is, however, another area where data privacy, or the lack of it, may have a greater impact on public health. The World Health Organization defines social determinants of health as ‘conditions in which people are born, grow, live, work and age’. The idea rests on our knowledge that the distribution of money, power and access to resources such as education, employment, housing, and health care plays a significant role in health inequities within and between populations. Traditionally, we look at inequalities that arise from an individual’s current position in a population. But predictive analytics based on aggregated data categorize individuals not only by their current status but also by their future potential (for example, as a potential liability risk or as a future client). The widespread use of digital technologies, wearable devices, and remote services, together with the introduction of thousands of health and wellness apps and data from social media, e-commerce sites and other online activity, creates a new front where massive amounts of data are produced and shared across multiple industries. A recent study published in the British Medical Journal examined the data-sharing practices of top medicine-related apps and uncovered an astonishingly large network of third parties that could potentially receive this information. Consumers often share their information with specific apps on their phones, weighing only the risks of using that particular app. Few are aware that the information they provide is exchanged with other companies, aggregated into larger data sources, and ultimately used to build profiles about them. This type of profiling is not limited to age, gender, race, or income, but includes a much larger set of categories about our daily choices. Most companies use profiling information to reach potential customers with targeted advertisements or relevant offers. Others use it to adjust their services or to set a specific price for an individual consumer.

This type of profiling can shape the offers and opportunities an individual receives and the choices they make. Furthermore, data gathered and entered into analyses without the individual’s explicit consent are likely to be irrelevant, noisy, and of low quality. Conclusions drawn from such analyses are inherently unreliable and should not affect an individual’s access to critical resources. When companies use these data to shape offers or decide on eligibility, they should, at a minimum, inform the individuals concerned and give them a chance to correct errors. Yet this is not what is happening.

Let us look more closely at how personal data are currently shared across industries, using examples from recent headlines that appear almost daily but attract little attention. We recently read that our social media posts can alter our life insurance premiums. Early in 2019, New York State published guidance allowing life insurance companies to use social media posts and other external data sources, along with algorithms and predictive models, to calculate premiums, provided they can show that doing so does not unfairly discriminate against certain customers. This may help you if you consistently share Instagram posts reflecting your healthy lifestyle choices or the marathons you finish. But if your social media posts link you to risky behaviors or possible health challenges, you may end up paying higher premiums.

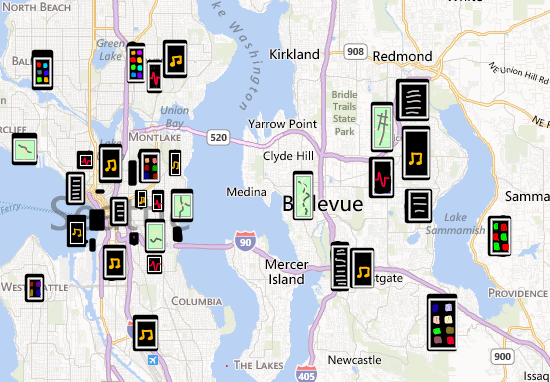

Much of who we are is revealed by information about where we spend our time. Sharing our geolocation data, the real-time information about the geographic coordinates where we are, reveals our whereabouts and can potentially put our safety at risk. Can it also affect our access to important opportunities and resources, such as employment or housing? In a New York Times story published in December 2018, a striking interactive map showed the daily paths of millions of anonymous cell phones in New York City. Built from geolocation data collected from each phone, the map showed, down to the minute, where these phones (or the individuals carrying them) had been at every hour of the day and night. It turns out that very few people are aware that their phones routinely broadcast their exact geolocation, and even fewer are aware that this information is sold to different companies. Once you follow the map and see the exact locations and addresses of these phones, it is not difficult to identify individual users. Those who were identified and interviewed by the New York Times, such as a middle school teacher, expressed great surprise and concern. When made aware of the extent of data sharing, many users choose to turn location services off. But that may not be enough: even when location services are off, your phone may still be tracking your location.

Another headline shows who might seek geolocation data: a bounty hunter, for example, who wants to locate an individual or a lost cell phone using data produced by telecommunication companies. Location data can put an individual's safety at serious risk. Strava, an app popular with runners, came under scrutiny when it was discovered that the app was making its users' exact exercise locations publicly visible, including those of US military personnel deployed overseas. From these soldiers’ exercise routes, anyone could map the US military bases where they were stationed; the example in the article was a base in Afghanistan.

In public health and medical research we have clear guidelines describing how participant pictures can be taken and how they may be used in publications. Commercial entities, however, seem to lack such guidelines. A new controversy has emerged over whether facial images are personal data and whether they can be considered de-identified when no names are attached. We learned that IBM obtained millions of user photos from the photo-sharing site Flickr and used them to develop facial recognition technology. These images were published under Creative Commons licenses, and it was argued that they could therefore be reused without additional permission from their owners. Yet few types of data can identify a person as clearly as a facial image. A bipartisan group of lawmakers recently introduced the ‘Commercial Facial Recognition Privacy Act of 2019’ to block companies from using facial images without users’ consent.

There is an additional layer of concern that public health needs to pay attention to: the use of personal data and profiling to alter human behavior. Large store chains, such as Kroger and Walgreens, are reportedly testing a new AI technology intended to improve their marketing and sales. Small cameras placed in stores observe shoppers and estimate their age, gender, and current mood, mainly to show them targeted advertisements while they shop. The goal here is not to promote healthy choices or improve public health, but to deliver targeted advertising and increase sales. Suppose your doctor has told you to limit your sugar intake, but you linger a little longer in front of the ice cream section, debating whether to buy some. With this technology, you could find yourself seeing a few more ice cream ads before you reach the checkout line. Such attempts to modify consumer behavior need to be seriously examined by public health officials, and perhaps even explored as a tool for promoting healthy behaviors.

These recent examples show that a wide range of our data are already being shared without our knowledge or consent, and used in ways that may not only affect our future opportunities but are also designed to understand and modify our choices and behavior. The implications from a public health perspective are significant. While we are still debating whether data privacy is a human right and whether there is any point in protecting it, we urgently need to shift our focus to educating and empowering users and giving them control over who receives which of their data, and for what purposes. Finally, discussions about data privacy should be viewed not purely as a consumer protection issue, but also as a significant opportunity to protect and promote public health.